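A first look at k8s, using minikube as the local test environment (not recommended; prefer k3s or full k8s)

k3s can be used in production. Usually, though, you just use a cloud provider's managed k8s service and skip all of this.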

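Getting started

Install two CLI tools, `minikube` and `kubectl`. `kubeadm` is needed as well; it ships in the `kubernetes` package. Docker should also be set up.

```shell
export DOCKER_HOST=tcp://127.0.0.2:39012

# Start
# To avoid slow image pulls during `minikube start`, remove any old cluster
# and start again with a Chinese mirror (--image-mirror-country below)
minikube delete
# minikube delete --all --purge

# --container-runtime='': The container runtime to be used. Valid options: docker, cri-o, containerd (default: auto)
# Set the driver explicitly; otherwise, if KVM is installed, minikube picks the libvirt (kvm2) driver
# --driver='': Driver is one of: virtualbox, vmwarefusion, kvm2, vmware, none, docker, podman, ssh (defaults to auto-detect)
# I use docker
minikube start --container-runtime=docker --driver=docker --image-mirror-country='cn'

# With only one CPU, add these flags
minikube start --container-runtime=docker --driver=docker --image-mirror-country='cn' \
  --extra-config=kubeadm.ignore-preflight-errors=NumCPU --force --cpus 1
```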
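The single-CPU flags are only needed when the machine really has one core. The sketch below picks the flags automatically. It assumes bash and GNU coreutils `nproc`, and the helper name `minikube_start_args` is hypothetical.

```shell
# Hypothetical helper: print the minikube start flags used in these notes,
# adding the single-CPU workaround only when the CPU count is 1.
# Usage: minikube start $(minikube_start_args)
minikube_start_args() {
  # Allow overriding the CPU count (handy for testing); default to nproc
  local cpus="${1:-$(nproc)}"
  local args="--container-runtime=docker --driver=docker --image-mirror-country=cn"
  if [ "$cpus" -le 1 ]; then
    # Skip kubeadm's NumCPU preflight check and force a 1-CPU cluster
    args="$args --extra-config=kubeadm.ignore-preflight-errors=NumCPU --force --cpus=1"
  fi
  printf '%s\n' "$args"
}
```

The unquoted `$(...)` in the usage line relies on word splitting. That is safe here because none of the flags contain spaces.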

```shell
# Installation finished: 🏄 Done!
docker ps
# CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
# 22442f4c59d2 registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase:v0.0.30 "/usr/local/bin/entr…" 53 seconds ago Up 52 seconds 0.0.0.0:49162->22/tcp, 0.0.0.0:49161->2376/tcp, 0.0.0.0:49160->5000/tcp, 0.0.0.0:49159->8443/tcp, 0.0.0.0:49158->32443/tcp minikube

# [leng@nixos:~]$ docker images
# REPOSITORY TAG IMAGE ID CREATED SIZE
# registry.cn-hangzhou.aliyuncs.com/google_containers/kicbase v0.0.30 1312ccd2422d 8 months ago 1.14GB

# Check status
minikube status

# Show the node IP
minikube ip

# Web dashboard
minikube dashboard

# Nodes
minikube node list
# minikube 192.168.49.2

# Expose services: gives LoadBalancer services a reachable IP (runs in the foreground)
minikube tunnel

# Enter the minikube container, which runs its own Docker inside (Docker-in-Docker)
docker exec -it minikube bash
# Inside the container:
docker ps
# ...
docker images
# REPOSITORY TAG IMAGE ID CREATED SIZE
# registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver v1.23.3 f40be0088a83 9 months ago 135MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager v1.23.3 b07520cd7ab7 9 months ago 125MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler v1.23.3 99a3486be4f2 9 months ago 53.5MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy v1.23.3 9b7cc9982109 9 months ago 112MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/etcd 3.5.1-0 25f8c7f3da61 12 months ago 293MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/coredns/coredns v1.8.6 a4ca41631cc7 13 months ago 46.8MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/coredns v1.8.6 a4ca41631cc7 13 months ago 46.8MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/pause 3.6 6270bb605e12 14 months ago 683kB
# registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetesui/dashboard v2.3.1 e1482a24335a 17 months ago 220MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/dashboard <none> e1482a24335a 17 months ago 220MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetesui/metrics-scraper v1.0.7 7801cfc6d5c0 17 months ago 34.4MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-scraper <none> 7801cfc6d5c0 17 months ago 34.4MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/k8s-minikube/storage-provisioner v5 6e38f40d628d 19 months ago 31.5MB
# registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner v5 6e38f40d628d 19 months ago 31.5MB
```

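pod: the smallest deployable unit in k8s. Unlike a container, one pod can hold several containers, and those containers can talk to each other over the local network (localhost).

deployment: manages pods

In production we almost never manage pods directly. A Deployment handles pod management, scaling, health checks, rolling restarts, and so on.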

deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: leng-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: leng
  template:
    metadata:
      labels:
        app: leng
    spec:
      containers:
        - image: docker.io/library/caddy:latest
          name: caddy-container
```

```shell
# Apply
kubectl apply -f deployment.yaml

# Delete
kubectl delete -f deployment.yaml
# deployment.apps "leng-deployment" deleted

# Status: READY 0/3 means the pods are not up yet; wait a moment
kubectl get deployments
# NAME READY UP-TO-DATE AVAILABLE AGE
# demo-deployment 0/3 1 0 64s

# Delete everything
# kubectl delete deployment,service --all

# List the pods it manages
kubectl get pod

# Temporarily forward the pod's port 80 to local port 8080 for testing
kubectl port-forward --address 0.0.0.0 leng-deployment-5b99cc98d-svdpw 8080:80

# Show details of a single pod
kubectl describe pod leng-deployment-5b99cc98d-svdpw

# Delete a pod (the Deployment replaces it with a new one)
kubectl delete pod leng-deployment-5b99cc98d-svdpw

# Open a shell inside a pod
kubectl exec -it lengadmin-deployment-6c76f49dc4-bnxfg -- sh
```

Private images need imagePullSecrets. Base64-encode the Docker config file and paste the resulting string, with spaces and line breaks removed, as the value of the field data[".dockerconfigjson"]:
https://kubernetes.io/zh-cn/docs/tasks/configure-pod-container/pull-image-private-registry/

```shell
cat ~/.docker/config.json
base64 ~/.docker/config.json
```

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: registrykey-jihulab
  namespace: default
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: ewoJImF1dGhzIjo......mF1dGh
```
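Wrapped `base64` output is an easy way to break this Secret. GNU `base64 -w 0` prints everything on one line instead. The sketch below renders the Secret above straight from a Docker config file. It assumes GNU coreutils, and the helper name `dockerconfig_secret` is hypothetical.

Two related points:
- The Deployment's pod spec must then reference the Secret via `imagePullSecrets: [{name: registrykey-jihulab}]`.
- The official docs also show `kubectl create secret generic <name> --from-file=.dockerconfigjson=<path> --type=kubernetes.io/dockerconfigjson`, which avoids manual encoding altogether.

```shell
# Hypothetical helper: print a kubernetes.io/dockerconfigjson Secret manifest.
# $1 = secret name, $2 = path to the Docker config (default ~/.docker/config.json)
# Usage: dockerconfig_secret registrykey-jihulab | kubectl apply -f -
dockerconfig_secret() {
  local name="$1"
  local config="${2:-$HOME/.docker/config.json}"
  local encoded
  # -w 0 disables line wrapping (GNU coreutils), so the value stays on one line
  encoded="$(base64 -w 0 "$config")" || return 1
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: ${name}
  namespace: default
type: kubernetes.io/dockerconfigjson
data:
  .dockerconfigjson: ${encoded}
EOF
}
```

If `config.json` uses a credential helper (a `credsStore` entry instead of `auths`), it contains no credentials to encode. In that case, run `docker login` without the helper first.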

Service: load balancing / management

service.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: service-demo
spec:
  type: ClusterIP
  selector:
    app: leng
  ports:
    - port: 80
      targetPort: 80
```

```shell
# Apply
kubectl apply -f service.yaml
```

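```shell
# Check the endpoints (look for service-demo)
kubectl get endpoints
# NAME ENDPOINTS AGE
# kubernetes 192.168.49.2:8443 69m
# service-demo 172.17.0.4:80,172.17.0.5:80,172.17.0.6:80 18s

# Get the service IP
kubectl get service

# On minikube the service can also be opened by hand
# minikube service service-demo

# With multiple hosts, a NodePort service (port range 30000-32767) load-balances across
# the service's pods and exposes them directly on every node's IP, i.e. to the public network
# Then put an Ingress in front of NodePort (multiple hosts) or ClusterIP (single host) services

# Temporarily forward service port 80 to port 8080 on the physical host for external access.
# Note: port-forward to a Service connects to a single pod, so no load balancing happens
# (the Ingress test balanced correctly)
kubectl port-forward --address 0.0.0.0 service/service-demo 8080:80

# externalIPs can also expose a service directly to the outside without Ingress routing
# (an nginx port forward is still needed)
```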
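A NodePort must fall inside the default range 30000-32767; the kube-apiserver flag `--service-node-port-range` can change that range. The sketch below prints a NodePort variant of `service-demo` and rejects out-of-range ports. The helper name `nodeport_service` and the port 30080 in the usage line are hypothetical.

```shell
# Hypothetical helper: print a NodePort Service for pods labeled app=$2.
# $1 = service name, $2 = app label, $3 = service port, $4 = target port, $5 = nodePort
# Usage: nodeport_service service-demo leng 80 80 30080 | kubectl apply -f -
nodeport_service() {
  local name="$1" app="$2" port="$3" target="$4" node_port="$5"
  # Default kube-apiserver NodePort range is 30000-32767
  if [ "$node_port" -lt 30000 ] || [ "$node_port" -gt 32767 ]; then
    echo "nodePort $node_port is outside 30000-32767" >&2
    return 1
  fi
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${name}
spec:
  type: NodePort
  selector:
    app: ${app}
  ports:
    - port: ${port}
      targetPort: ${target}
      nodePort: ${node_port}
EOF
}
```

After applying it, the pods answer on `http://<any-node-ip>:30080`. On minikube, `minikube service service-demo --url` prints such a URL.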

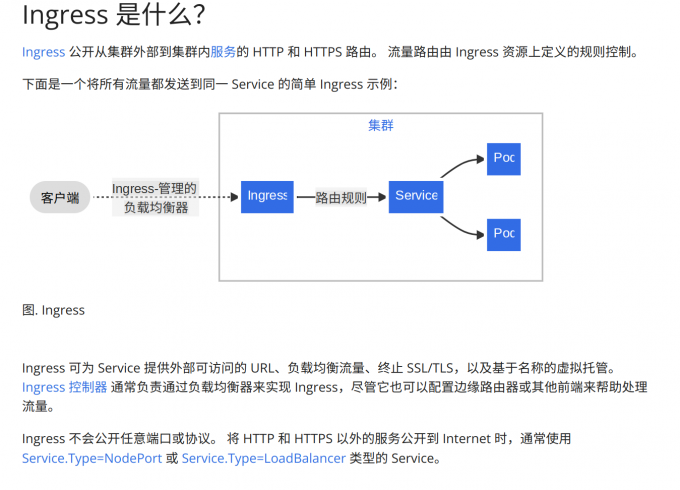

ingress: the entry point. An ingress controller such as ingress-nginx or traefik must already be running in the cluster before `kind: Ingress` resources can be used.

```shell
# k3s ships with traefik, so it can be used right away
kubectl get svc --all-namespaces
# NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
# default kubernetes ClusterIP 10.43.0.1 <none> 443/TCP 24h
# kube-system kube-dns ClusterIP 10.43.0.10 <none> 53/UDP,53/TCP,9153/TCP 24h
# kube-system metrics-server ClusterIP 10.43.67.144 <none> 443/TCP 24h
# kube-system traefik LoadBalancer 10.43.69.161 172.23.186.253 80:30158/TCP,443:32691/TCP 24h
```

```shell
# Enable the nginx ingress controller
minikube addons enable ingress
# ▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
# ▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/nginx-ingress-controller:v1.1.1
# ▪ Using image registry.cn-hangzhou.aliyuncs.com/google_containers/kube-webhook-certgen:v1.1.1
# 🔎 Verifying ingress addon...
# 🌟 The 'ingress' addon is enabled

# Check status
kubectl get pods -n ingress-nginx

# View the generated nginx config
kubectl exec -it -n ingress-nginx ingress-nginx-controller-6cfb67d797-pzcdp -- cat /etc/nginx/nginx.conf

kubectl get ingress
# NAME CLASS HOSTS ADDRESS PORTS AGE
# one-ingress nginx www.o.net 192.168.49.2 80 41s

# Details
kubectl describe ingress one-ingress

# Access it through the cluster (node) address
curl http://192.168.49.2:80
# The rule below only matches host www.o.net, so send that Host header
curl -H 'Host: www.o.net' http://192.168.49.2:80
```

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: one-ingress
spec:
  rules:
    - host: www.o.net
      http:
        paths:
          - backend:
              service:
                name: service-demo
                port:
                  number: 80
            path: /
            pathType: Prefix
```

Access it via www.o.net, e.g. by mapping www.o.net to 192.168.49.2 in /etc/hosts.

Case 1: single node / single service / hot update

Files: deployment.yaml, service.yaml, ingress.yaml

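Create multiple local nodes

```shell
minikube node add
minikube node list
# minikube 192.168.49.2
# minikube-m02 192.168.49.3

minikube status
# minikube
# type: Control Plane
# host: Running
# kubelet: Running
# apiserver: Running
# kubeconfig: Configured

# minikube-m02
# type: Worker
# host: Running
# kubelet: Running
```

To deploy onto a specific node, add nodeName at the same level as containers (in the pod template's spec). Without it, the scheduler picks the node.

```yaml
    spec:
      nodeName: minikube-m02
      containers:
```

Note: also change the Deployment's metadata.name. Otherwise k8s treats the change as an edit of the existing Deployment and simply moves it to the other node.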
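Putting those two notes together, the sketch below prints a copy of `leng-deployment` under a new name, with `nodeName` next to `containers`. Applying it therefore creates a second Deployment instead of editing the first. The helper name `pinned_deployment` is hypothetical.

About the label: keeping `app: leng` lets `service-demo` route to both Deployments. However, the Kubernetes docs warn that controllers with overlapping selectors may conflict, so passing a distinct label is the safer choice.

```shell
# Hypothetical helper: print a copy of leng-deployment pinned to one node.
# $1 = new Deployment name, $2 = node name, $3 = app label (default: leng)
# Usage: pinned_deployment leng-deployment-m02 minikube-m02 | kubectl apply -f -
pinned_deployment() {
  local name="$1" node="$2" app="${3:-leng}"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ${name}
spec:
  replicas: 3
  selector:
    matchLabels:
      app: ${app}
  template:
    metadata:
      labels:
        app: ${app}
    spec:
      # nodeName skips the scheduler and binds the pods directly to this node
      nodeName: ${node}
      containers:
        - image: docker.io/library/caddy:latest
          name: caddy-container
EOF
}
```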

Join a node manually

```shell
# Print the command a new node runs to join the cluster
kubeadm token create --print-join-command
```

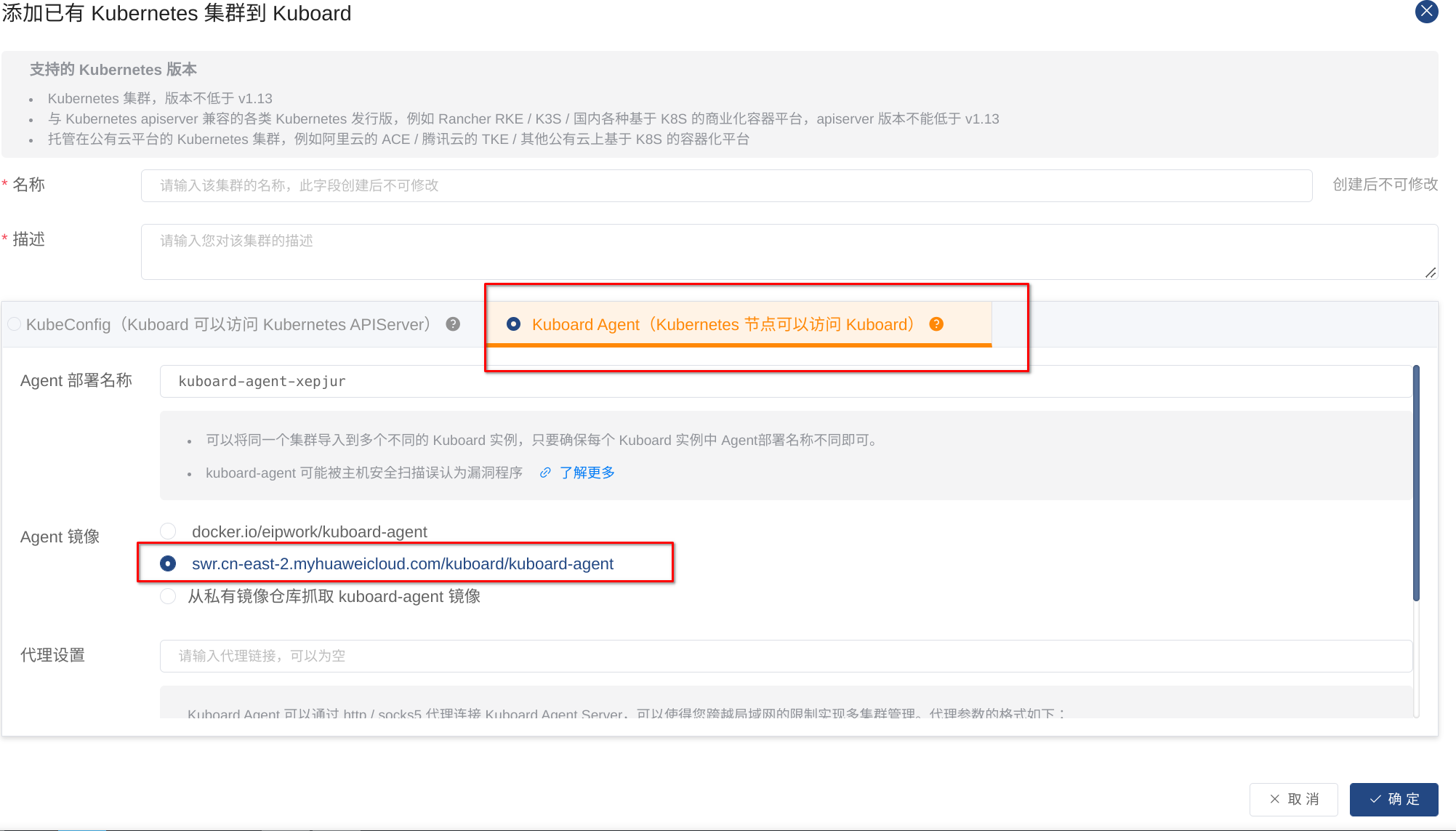

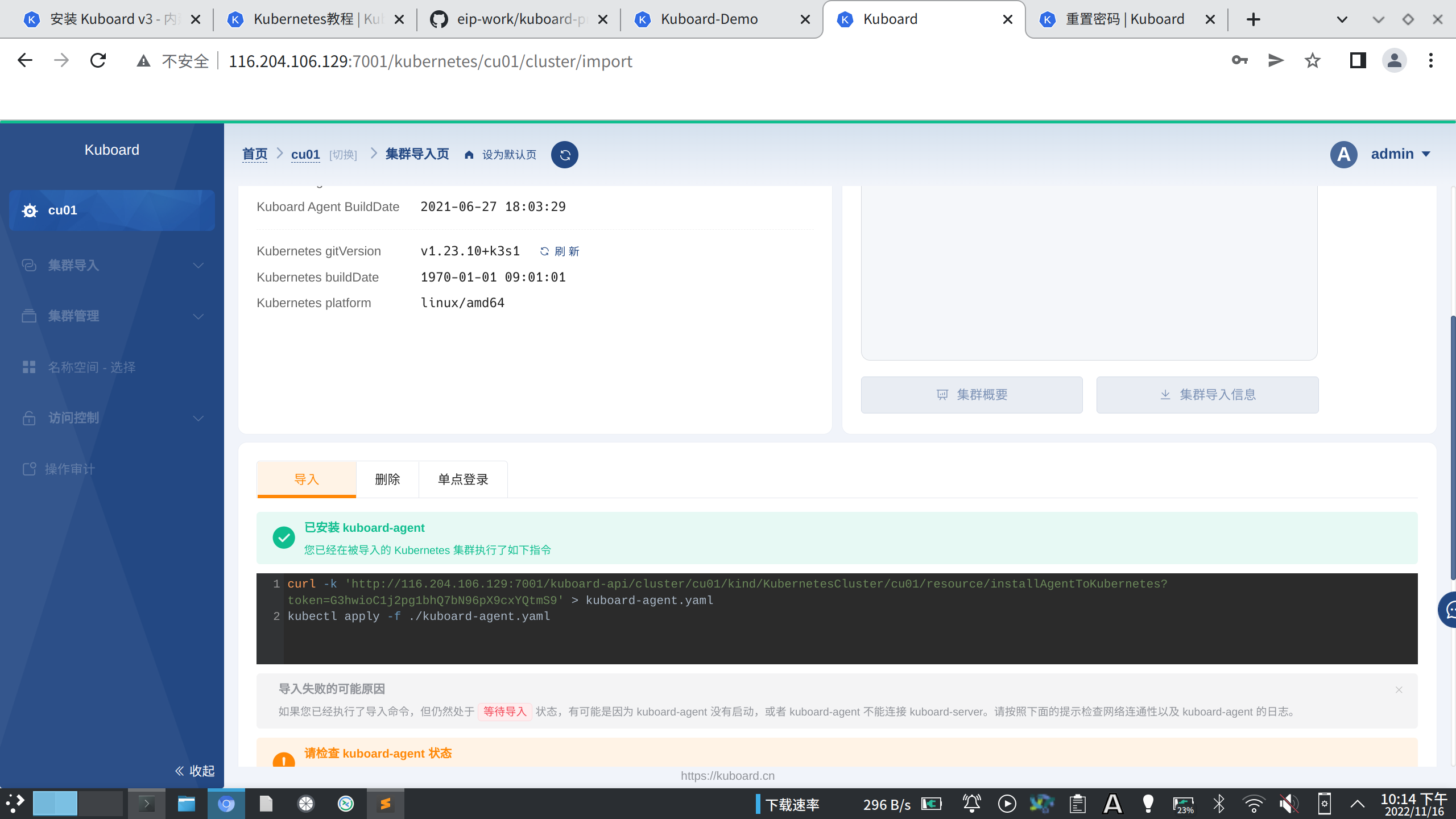

Installing Kuboard, a Chinese-made control panel: https://www.kuboard.cn/install/v3/install-built-in.html

```shell
# Install v3 with Docker on a standalone host:
docker run -itd \
  --name=kuboard \
  -p 7001:80/tcp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://[internal-or-public-IP]:7001" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /home/jcleng/kuboarddata:/data \
  -e KUBOARD_ADMIN_DERAULT_PASSWORD="Kuboard123" \
  swr.cn-east-2.myhuaweicloud.com/kuboard/kuboard:v3

# Then open http://[internal-or-public-IP]:7001/
# and add the cluster in proxy (agent) mode
```

Ingress tips

Before creating an Ingress, you can create an IngressClass first:

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  annotations:
    k8s.kuboard.cn/managed-by-kuboard: 'true'
  name: default-ingress
  # resourceVersion: '32509'  (assigned by the API server; omit it when creating the object)
spec:
  controller: k8s.io/ingress-nginx
```

Then reference it from the Ingress:

```yaml
spec:
  ingressClassName: default-ingress
```

The svc objects in the ingress-nginx namespace all map port 80 by default. So when creating your own svc, choose port 80 for this to work; targetPort stays the port inside the container.

```shell
kubectl get svc -n ingress-nginx
# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
# ingress-nginx-controller-admission-ingress-nginx ClusterIP 10.43.15.179 <none> 443/TCP 12h
# ingress-nginx-controller-ingress-nginx NodePort 10.43.189.100 <none> 80:30377/TCP,443:31705/TCP 12h
# ingress-nginx-controller-admission-default-ingress ClusterIP 10.43.130.16 <none> 443/TCP 16m
# ingress-nginx-controller-default-ingress NodePort 10.43.88.164 <none> 80:31509/TCP,443:32539/TCP 16m
```

Set the domain with host in the Ingress (or just edit /etc/hosts); the domain can then be accessed directly:

```yaml
spec:
  ingressClassName: default-ingress
  rules:
    - host: localhost2.com
```

Under the hood, creating the ingress creates a NodePort svc in the ingress-nginx namespace.
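The IngressClass reference and the host rule can be combined into one full manifest. The sketch below prints such an Ingress. The helper name `ingress_for_host` and the Ingress name `demo` are hypothetical; `service-demo` and port 80 come from the Service section.

```shell
# Hypothetical helper: print an Ingress routing one host to a Service port.
# $1 = ingress name, $2 = IngressClass, $3 = host, $4 = service, $5 = service port
# Usage: ingress_for_host demo default-ingress localhost2.com service-demo 80 | kubectl apply -f -
ingress_for_host() {
  local name="$1" class="$2" host="$3" svc="$4" port="$5"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ${name}
spec:
  ingressClassName: ${class}
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ${svc}
                port:
                  number: ${port}
EOF
}
```

To test without editing /etc/hosts, `curl --resolve localhost2.com:31509:<node-ip> http://localhost2.com:31509/` pins the name to a node IP. Here 31509 is the HTTP NodePort of `ingress-nginx-controller-default-ingress` in the output above.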

Disk usage

```shell
sudo du -hd 1 /var/lib
# 4.0K ./machines
# 24K ./nixos
# 7.5G ./docker
# 4.0K ./private
# 4.0K ./udisks2
# 2.2G ./rancher
# 888K ./kubelet
# 224K ./cni
# 1.1G ./containers
# 184K ./systemd
# 11G ./

# k3s data: /var/lib/rancher/k3s/agent/containerd
# docker data: /var/lib/docker

# Remove unused Docker data; -a removes all unused images, not just dangling ones
docker system prune -a
```

When k8s uses containerd rather than Docker, its data can be managed with crictl:

```shell
nix-shell -p cri-tools
sudo crictl -r /run/k3s/containerd/containerd.sock ps
sudo crictl -r /run/k3s/containerd/containerd.sock img

# With plain containerd, use ctr instead
# nix-shell -p containerd
# sudo ctr -a /run/k3s/containerd/containerd.sock i list

# Docker-in-Docker uses /run/containerd/containerd.sock;
# the containerd managed by Docker itself listens on /var/run/docker/containerd/containerd.sock
sudo ctr -a /var/run/docker/containerd/containerd.sock ns list
sudo ctr -a /var/run/docker/containerd/containerd.sock -n moby c ls
# Container names are not shown: Docker created these containers in containerd (namespace moby)
# CONTAINER IMAGE RUNTIME
# 0796d21d7577a390087978ccf8d93f532d8452392e37e393578d2e29863fed97 - io.containerd.runc.v2
```

Calling the web API

```shell
# https://kubernetes.io/zh-cn/docs/reference/kubernetes-api/workload-resources/deployment-v1/#patch-%E9%83%A8%E5%88%86%E6%9B%B4%E6%96%B0%E6%8C%87%E5%AE%9A%E7%9A%84-deployment

# API address: the clusters[].cluster.server value
cat ~/.kube/config

# Create a serviceaccount to get a token
kubectl create serviceaccount jcleng

# List service accounts
kubectl get sa

# Show the token. This works up to k8s 1.23; from 1.24 on no token Secret is
# created automatically, so use `kubectl create token jcleng` instead
kubectl describe secret jcleng
```

Finally, bind a role:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: jcleng
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: jcleng
    namespace: default
```
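The requests below can also be sent from the shell. The sketch below reads the API server address from the current kubeconfig context, issues a token, and performs a GET. It assumes kubectl 1.24 or later (for `kubectl create token`) and the ClusterRoleBinding above; the helper name `k8s_api_get` is hypothetical.

```shell
# Hypothetical helper: GET a Kubernetes API path with a ServiceAccount token.
# $1 = API path (e.g. /api), $2 = ServiceAccount name (default: jcleng)
# Usage: k8s_api_get /api/v1/namespaces/default/pods
k8s_api_get() {
  local api_path="$1" sa="${2:-jcleng}"
  local server token
  # API server URL of the current context (the clusters[].cluster.server field)
  server="$(kubectl config view --minify -o jsonpath='{.clusters[0].cluster.server}')" || return 1
  # Short-lived token (kubectl >= 1.24)
  token="$(kubectl create token "$sa")" || return 1
  # -k skips TLS verification: acceptable for a local test cluster only
  curl -sk -H "Authorization: Bearer ${token}" "${server}${api_path}"
}
```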

```http
### Test: returns APIVersions
GET https://0.0.0.0:49154/api
Authorization: Bearer <token>

### Get pods
GET https://0.0.0.0:49154/api/v1/namespaces/default/pods
Authorization: Bearer <token>

### Get deployments (all namespaces)
GET https://0.0.0.0:49154/apis/apps/v1/deployments
Authorization: Bearer <token>

### Partial patch: change replicas
# https://kubernetes.io/zh-cn/docs/reference/kubernetes-api/workload-resources/deployment-v1/#patch-%E9%83%A8%E5%88%86%E6%9B%B4%E6%96%B0%E6%8C%87%E5%AE%9A%E7%9A%84-deployment
PATCH https://0.0.0.0:49154/apis/apps/v1/namespaces/default/deployments/testhold-deployment
Content-Type: application/json-patch+json
Authorization: Bearer <token>

[
  {
    "op": "replace",
    "path": "/spec/replicas",
    "value": 3
  }
]

### Partial patch: change image
PATCH https://0.0.0.0:49154/apis/apps/v1/namespaces/default/deployments/testhold-deployment
Content-Type: application/json-patch+json
Authorization: Bearer <token>

[
  {
    "op": "replace",
    "path": "/spec/template/spec/containers/0/image",
    "value": "registry.jihulab.com/jcleng/test_hold:v1-4"
  }
]
```
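The JSON Patch (RFC 6902) bodies above can also be sent without a REST client. The sketch below builds a single-operation `replace` patch for use with `kubectl patch --type=json` or curl. The helper name `json_patch_replace` is hypothetical, and in the curl line `$APISERVER` and `$TOKEN` are placeholders for the server URL and token.

```shell
# Hypothetical helper: build a one-operation JSON Patch (RFC 6902) "replace".
# $1 = JSON Pointer path, $2 = value as a JSON literal (3, '"img:tag"', ...)
# Usage (kubectl):
#   kubectl patch deployment testhold-deployment --type=json \
#     -p "$(json_patch_replace /spec/replicas 3)"
# Usage (curl):
#   curl -sk -X PATCH -H 'Content-Type: application/json-patch+json' \
#     -H "Authorization: Bearer $TOKEN" \
#     --data "$(json_patch_replace /spec/replicas 3)" \
#     "$APISERVER/apis/apps/v1/namespaces/default/deployments/testhold-deployment"
json_patch_replace() {
  printf '[{"op":"replace","path":"%s","value":%s}]\n' "$1" "$2"
}
```

The value is passed as a raw JSON literal, so a string such as an image name must include its own double quotes.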

ingress-nginx canary release

Docs: https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/annotations/#canary

Copy the Deployment/Service/Ingress trio and rename the app to the new application. The main change is in the Ingress: keep .spec.rules.host unchanged and set service.name to the canary service. Install k8s.io/ingress-nginx as the IngressClass first, then select nginx with ingressClassName.

```yaml
# Canary ingress
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    # We are defining this annotation to prevent nginx
    # from redirecting requests to `https` for now
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    # Enable canary routing
    nginx.ingress.kubernetes.io/canary: "true"
    # Route by the "canary" request header: always / never
    nginx.ingress.kubernetes.io/canary-by-header: "canary"
spec:
  # Comment out ingressClassName when using traefik
  ingressClassName: nginx
```

Test it: requests carrying the header `canary: always` go to the canary.

```http
GET https://www.xxxx.icu/
canary: always
```

The canary serves the same production data. Once it checks out, simply update the image version of the main service.

```shell
kubectl get ing
# NAME CLASS HOSTS ADDRESS PORTS AGE
# canary nginx www.xxxx.icu 192.168.0.7 80 27m
# stable nginx www.xxxx.icu 192.168.0.7 80 47m
```
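Besides the header, ingress-nginx can also send a percentage of traffic to the canary with the `nginx.ingress.kubernetes.io/canary-weight` annotation (0-100). When both are set, the header rule takes precedence.

The sketch below prints a complete canary Ingress, including the rules described above: same host as the stable Ingress, with the backend pointing at the canary Service. The helper name `canary_ingress` and the service name `canary-service` in the usage line are hypothetical.

```shell
# Hypothetical helper: print a canary Ingress for ingress-nginx.
# $1 = host (same as the stable Ingress), $2 = canary Service, $3 = port,
# $4 = optional traffic weight 0-100 (header routing is always enabled)
# Usage: canary_ingress www.xxxx.icu canary-service 80 10 | kubectl apply -f -
canary_ingress() {
  local host="$1" svc="$2" port="$3" weight="$4"
  cat <<EOF
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: canary
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-by-header: "canary"
EOF
  # Only add the weight annotation when a weight was given
  if [ -n "$weight" ]; then
    printf '    nginx.ingress.kubernetes.io/canary-weight: "%s"\n' "$weight"
  fi
  cat <<EOF
spec:
  ingressClassName: nginx
  rules:
    - host: ${host}
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ${svc}
                port:
                  number: ${port}
EOF
}
```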

cri-tools: CLI and validation tools for Kubelet Container Runtime Interface (CRI).
https://github.com/kubernetes-sigs/cri-tools

```shell
crictl --runtime-endpoint=unix:///run/k3s/containerd/containerd.sock images
# IMAGE TAG IMAGE ID SIZE
# docker.io/anjia0532/google-containers.ingress-nginx.controller v1.6.4 7744eedd958ff 114MB
# docker.io/anjia0532/google-containers.ingress-nginx.kube-webhook-certgen v20220916-gd32f8c343 520347519a8ca 19.7MB
# docker.io/library/caddy latest d8464e23f16f9 17.5MB
# docker.io/library/nginx 1.16.0 ae893c58d83fe 44.8MB
# docker.io/rancher/klipper-helm v0.7.4-build20221121 6f2af12f2834b 92MB
# docker.io/rancher/klipper-lb v0.4.0 3449ea2a2bfa7 3.77MB
# docker.io/rancher/local-path-provisioner v0.0.23 9621e18c33880 13.9MB
# docker.io/rancher/mirrored-coredns-coredns 1.9.4 a81c2ec4e946d 15.1MB
# docker.io/rancher/mirrored-library-traefik 2.9.4 288889429becf 38.7MB
# docker.io/rancher/mirrored-metrics-server v0.6.2 25561daa66605 28.1MB
# docker.io/rancher/mirrored-pause 3.6 6270bb605e12e 301kB

# Images can also be pulled and removed by hand
crictl --runtime-endpoint=unix:///run/k3s/containerd/containerd.sock pull caddy
crictl --runtime-endpoint=unix:///run/k3s/containerd/containerd.sock rmi d8464e23f16f9
# Deleted: docker.io/library/caddy:latest

# Or set the endpoint once via an environment variable
export CONTAINER_RUNTIME_ENDPOINT=unix:///run/k3s/containerd/containerd.sock
crictl ps
```
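Instead of an environment variable, crictl can also read the endpoint from a config file (by default `/etc/crictl.yaml`) with `runtime-endpoint` and `image-endpoint` keys. On k3s, the bundled `k3s crictl` is already preconfigured. The sketch below writes such a file; the helper name `write_crictl_config` is hypothetical.

```shell
# Hypothetical helper: write a crictl config file so that --runtime-endpoint
# (or CONTAINER_RUNTIME_ENDPOINT) is no longer needed on every call.
# $1 = config file path (crictl reads /etc/crictl.yaml by default)
# $2 = runtime endpoint (default: the k3s containerd socket)
# Usage (as root): write_crictl_config /etc/crictl.yaml
write_crictl_config() {
  local file="$1"
  local endpoint="${2:-unix:///run/k3s/containerd/containerd.sock}"
  cat > "$file" <<EOF
runtime-endpoint: ${endpoint}
image-endpoint: ${endpoint}
EOF
}
```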

About LoadBalancer: https://docs.k0sproject.io/v1.22.1+k0s.0/examples/nginx-ingress/

```shell
# When a service is exposed as type LoadBalancer, its EXTERNAL-IP should be the server's public IP
# If no external IP is assigned (EXTERNAL-IP shows <pending>), the service cannot be reached through it
# Fix it by setting externalIPs in the service spec:
# externalIPs:
#   - 192.168.122.74
# 192.168.122.74 plays the public IP here; this is a VM, so it is the VM's IP
kubectl -n ingress-nginx get svc
# NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
# ingress-nginx-controller-admission ClusterIP 10.43.200.98 <none> 443/TCP 2d18h
# ingress-nginx-controller LoadBalancer 10.43.141.45 192.168.122.74 80:31210/TCP,443:30475/TCP 2d18h
```

Setting up a local k8s test environment with kind

kind is a tool for running local Kubernetes clusters using Docker container "nodes". kind was primarily designed for testing Kubernetes itself, but may be used for local development or CI.

```shell
nix-shell -p kind

# Use a pinned older version rather than the default latest: https://hub.docker.com/r/kindest/node/tags
kind create cluster --image kindest/node:v1.20.15

docker ps|grep kind
# 4c4cef51051d kindest/node:v1.20.15 "/usr/local/bin/entr…" 22 minutes ago Up 22 minutes 127.0.0.1:33423->6443/tcp kind-control-plane

# List clusters
kind get clusters

# Delete
kind delete cluster

# Show cluster info
kubectl cluster-info --context kind-kind

kubectl get nodes
# NAME STATUS ROLES AGE VERSION
# kind-control-plane Ready control-plane,master 51s v1.20.15
```
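kind can also publish node ports on the host when the cluster is created, via `extraPortMappings` in a kind config file passed with `kind create cluster --config <file>`. This is an alternative to the caddy/port-forward workaround further down. The sketch below writes such a config; the helper name `kind_config` is hypothetical.

One assumption: the mapping only helps if the ingress controller binds the node's ports 80/443 directly. ingress-nginx's `kind` provider manifest does this via hostPort; the `cloud` manifest used below does not.

```shell
# Hypothetical helper: print a kind cluster config that maps host ports
# 80/443 to the same ports on the control-plane node container.
# Usage:
#   kind_config > kind.yaml
#   kind create cluster --image kindest/node:v1.20.15 --config kind.yaml
kind_config() {
  cat <<'EOF'
kind: Cluster
apiVersion: kind.x-k8s.io/v1alpha4
nodes:
  - role: control-plane
    extraPortMappings:
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
EOF
}
```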

Configuring ingress-nginx

```shell
# Pick the manifest for the matching tag: https://github.com/kubernetes/ingress-nginx/blob/controller-v1.6.4/deploy/static/provider/cloud/deploy.yaml
# Mirror the images and replace the image references in the file: https://github.com/anjia0532/gcr.io_mirror/issues?q=ingress-nginx%2Fcontroller%3Av1.6.4
# Preferably drop the @sha256:... digest after the image names (the mirror's digest may differ)
kubectl apply -f deploy.yaml
# kubectl delete -f deploy.yaml

# Everything in the ingress-nginx namespace
watch -n1 kubectl get all -n ingress-nginx

# Why a pod failed
kubectl describe pod/ingress-nginx-admission-create-6fp97 -n ingress-nginx

# Most importantly, this creates an IngressClass named nginx; an Ingress must set
# ingressClassName: nginx to be handled by this ingress-nginx controller

# IP of the kind node container
docker inspect kind-control-plane|grep IPAddress

# If service/ingress-nginx-controller gets no EXTERNAL-IP, edit it manually
kubectl edit service/ingress-nginx-controller -n ingress-nginx
```

Add under spec:

```yaml
externalIPs:
  - 172.20.0.2
```
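Instead of `kubectl edit`, the same change can be applied non-interactively with `kubectl patch`, which is handy in scripts. The sketch below builds the patch body for one or more IPs; the helper name `external_ips_patch` is hypothetical.

```shell
# Hypothetical helper: build a patch body that sets spec.externalIPs.
# Usage:
#   kubectl -n ingress-nginx patch service ingress-nginx-controller \
#     -p "$(external_ips_patch 172.20.0.2)"
external_ips_patch() {
  local ips="" ip
  for ip in "$@"; do
    # Join the IPs into a comma-separated list of JSON strings
    ips="${ips:+$ips,}\"$ip\""
  done
  printf '{"spec":{"externalIPs":[%s]}}\n' "$ips"
}
```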

```shell
# Access
curl http://172.20.0.2:80

# Use caddy or port-forward to proxy a local port to the container's port
nix-shell -p caddy
caddy reverse-proxy --from :2080 --to 172.20.0.2:80
curl http://localhost:2080/

# For example, after installing kubernetes-dashboard
kubectl port-forward svc/kubernetes-dashboard --address 0.0.0.0 28015:443
# -k: the dashboard serves a self-signed certificate by default
curl -k https://127.0.0.1:28015
kubectl create clusterrolebinding default-admin --clusterrole cluster-admin --serviceaccount=default:default

# Access by domain name
curl --resolve chart-example.local:80:172.20.0.2 http://chart-example.local
```